This Acoustic Gesture Recognition project by Gary Halajian and John Wang, from Cornell University's Designing with Microcontrollers course, reminds me of the Object Scratching Input Device that Chris Harrison made. (After reading their references, I saw that, sure enough, they cite the Object Scratching Input Device project.)

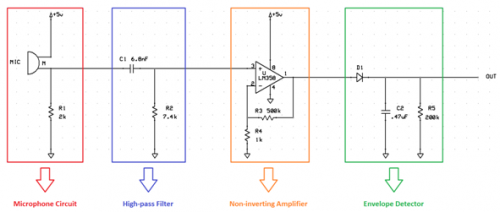

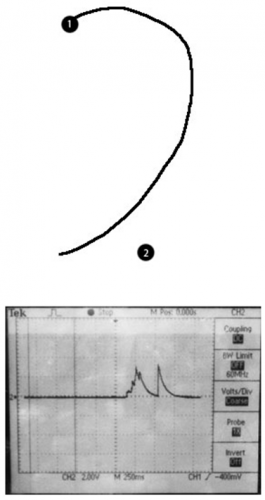

"Our project utilizes a microphone placed in a stethoscope to recognize various gestures when a fingernail is dragged over a surface. We used the unique acoustic signatures of different gestures on an existing passive surface such as a computer desk or a wall. Our microphone listens to the sound of scratching that is transmitted through the surface material. Our gesture recognition program works by analyzing the number of peaks and the width of these peaks for the various gestures which require your finger to move, accelerate and decelerate in a unique way. We also created a PC interface program to execute different commands on a computer based on what gesture is observed."
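The peak-counting idea in the quote can be sketched roughly as follows. This is not the authors' code. It is a minimal illustration under stated assumptions: the microphone signal is turned into an amplitude envelope by rectifying and smoothing, a "peak" is a contiguous run of envelope samples above a fixed threshold, and the helper names (`envelope`, `find_peaks`, `classify`), the threshold, the width cutoff, and the gesture labels are all hypothetical.

```python
# Sketch: recognize scratch gestures from the number and width of
# amplitude peaks. Assumptions: a "peak" is a contiguous run of envelope
# samples above a fixed threshold; gesture labels are hypothetical.
from typing import List, Tuple


def envelope(samples: List[float], window: int) -> List[float]:
    """Full-wave rectify the signal, then smooth it with a moving average."""
    out = []
    acc = 0.0
    for i, s in enumerate(samples):
        acc += abs(s)
        if i >= window:
            acc -= abs(samples[i - window])  # drop the sample leaving the window
        out.append(acc / min(i + 1, window))
    return out


def find_peaks(env: List[float], threshold: float) -> List[Tuple[int, int]]:
    """Return (start_index, width_in_samples) for each run above threshold."""
    peaks = []
    start = None
    for i, value in enumerate(env):
        if value > threshold and start is None:
            start = i                            # rising edge: a peak begins
        elif value <= threshold and start is not None:
            peaks.append((start, i - start))     # falling edge: record width
            start = None
    if start is not None:                        # still above threshold at end
        peaks.append((start, len(env) - start))
    return peaks


def classify(env: List[float], threshold: float = 0.5,
             long_width: int = 4) -> str:
    """Map peak count and widths to a hypothetical gesture label."""
    widths = [w for _, w in find_peaks(env, threshold)]
    if not widths:
        return "none"
    if len(widths) == 1:
        # One stroke: a long drag versus a short flick (hypothetical labels).
        return "line" if widths[0] >= long_width else "flick"
    return f"{len(widths)} strokes"


# Example: two bursts of scratching, the second one longer.
env = [0.0, 0.9, 0.8, 0.0, 0.0, 0.7, 0.9, 0.8, 0.6, 0.0]
print(find_peaks(env, 0.5))  # [(1, 2), (5, 4)]
print(classify(env))         # 2 strokes
```

A real system would tune the threshold and widths per surface and likely compare the peak pattern against stored templates rather than using fixed rules, since the quote notes that each gesture accelerates and decelerates the finger differently.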


Some further resources for those interested in acoustic HCI: over the past 4-5 years, a group in the EU has done both DSP-based acoustic gesture recognition, like the project above, and 2-D triangulation-based drawing.

http://www.taichi.cf.ac.uk/

Many of their research papers and publications on acoustic interfaces are available as PDFs here: http://www.taichi.cf.ac.uk/publications
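For a rough idea of how triangulation-based input can work, one common building block is estimating the time difference of arrival (TDOA) of the same tap or scratch at two sensors. Each delay places the source on a hyperbola, and three or more sensors let you intersect those curves to find a 2-D position. The sketch below covers only the delay step, using brute-force cross-correlation. It is not necessarily the method the group above uses, and the function names, sample rate, and wave speed in the surface material are hypothetical parameters.

```python
# Sketch: estimate the time difference of arrival (TDOA) of a tap or
# scratch between two surface sensors by brute-force cross-correlation.
# Assumptions: both channels share one sample rate, and the wave speed in
# the surface material is known; both are hypothetical parameters here.
from typing import List


def estimate_delay(a: List[float], b: List[float], max_lag: int) -> int:
    """Return the lag (in samples) maximizing sum(a[i] * b[i + lag]).

    A positive result means the signal reached sensor b later than sensor a.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, x in enumerate(a):
            j = i + lag
            if 0 <= j < len(b):
                score += x * b[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def path_difference(lag: int, sample_rate: float, wave_speed: float) -> float:
    """Convert a sample lag into the difference in distance to the two sensors."""
    return lag / sample_rate * wave_speed


# Example: the same impulse arrives two samples later at sensor b.
a = [0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
b = [0.0, 0.0, 0.0, 0.0, 1.0, 0.0]
print(estimate_delay(a, b, max_lag=3))  # 2
```

The brute-force loop keeps the idea visible; for real audio lengths an FFT-based correlation is the usual choice, and the wave speed has to be measured for each surface material.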